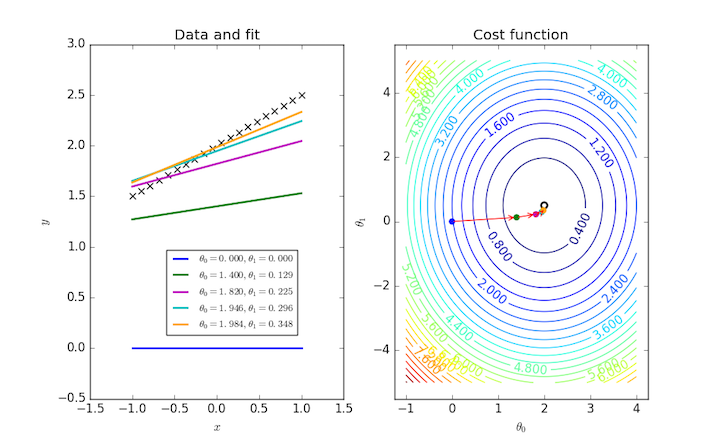

optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange

By a mysterious writer

Description

I have a function,

$$f(\mathbf{x})=x_1^2+4x_2^2-4x_1-8x_2,$$

which can also be expressed as

$$f(\mathbf{x})=(x_1-2)^2+4(x_2-1)^2-8.$$
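Expanding the completed-square form confirms that the two expressions agree. It also exposes the quadratic structure that steepest descent works with. The matrix names $Q$ and $\mathbf{b}$ below are introduced here for convenience and do not appear in the original question. The step-size formula is the standard exact line search for a quadratic $f(\mathbf{x})=\tfrac12\mathbf{x}^\top Q\mathbf{x}-\mathbf{b}^\top\mathbf{x}$. It comes from setting the derivative of $f(\mathbf{x}_k-\alpha\mathbf{g}_k)$ with respect to $\alpha$ to zero.

$$
\begin{aligned}
(x_1-2)^2+4(x_2-1)^2-8 &= (x_1^2-4x_1+4)+4(x_2^2-2x_2+1)-8 = x_1^2+4x_2^2-4x_1-8x_2,\\
\nabla f(\mathbf{x}) &= \begin{pmatrix}2x_1-4\\ 8x_2-8\end{pmatrix} = Q\mathbf{x}-\mathbf{b},
\qquad Q=\begin{pmatrix}2&0\\0&8\end{pmatrix},\quad \mathbf{b}=\begin{pmatrix}4\\8\end{pmatrix},\\
\mathbf{x}_{k+1} &= \mathbf{x}_k-\alpha_k\mathbf{g}_k,
\qquad \mathbf{g}_k=\nabla f(\mathbf{x}_k),
\qquad \alpha_k=\frac{\mathbf{g}_k^\top\mathbf{g}_k}{\mathbf{g}_k^\top Q\,\mathbf{g}_k}.
\end{aligned}
$$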

I've deduced the minimizer $\mathbf{x}^*$ as $(2,1)$, with $f^* = f(\mathbf{x}^*) = -8$.
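The following is a minimal sketch that makes the non-convergence visible. It assumes exact line search and an illustrative starting point $\mathbf{x}_0=(0,0)$, since the question's own starting point is cut off. The iteration runs in exact rational arithmetic using Python's standard `fractions` module, so floating-point rounding cannot hide or fake a hit on $(2,1)$. The helper names `grad` and `steepest_descent` are hypothetical.

```python
from fractions import Fraction

# f(x) = x1^2 + 4 x2^2 - 4 x1 - 8 x2 = 1/2 x^T Q x - b^T x
# with Q = diag(2, 8) and b = (4, 8); x* = (2, 1), f* = -8.
Q = (Fraction(2), Fraction(8))   # diagonal entries of the Hessian
B = (Fraction(4), Fraction(8))   # linear term b


def f(x):
    x1, x2 = x
    return x1**2 + 4 * x2**2 - 4 * x1 - 8 * x2


def grad(x):
    # gradient g = Q x - b = (2 x1 - 4, 8 x2 - 8)
    return tuple(q * xi - bi for q, xi, bi in zip(Q, x, B))


def steepest_descent(x0, steps):
    """Steepest descent with exact line search, in exact rational arithmetic.

    Returns the list of iterates x_0, x_1, ...; stops early only if the
    gradient is exactly zero (i.e. the minimizer was reached exactly).
    """
    x = tuple(Fraction(v) for v in x0)
    history = [x]
    for _ in range(steps):
        g = grad(x)
        gg = sum(gi * gi for gi in g)
        if gg == 0:  # exactly at the minimizer
            break
        gQg = sum(q * gi * gi for q, gi in zip(Q, g))
        alpha = gg / gQg  # exact minimizer of f(x - alpha * g) over alpha
        x = tuple(xi - alpha * gi for xi, gi in zip(x, g))
        history.append(x)
    return history


if __name__ == "__main__":
    for k, x in enumerate(steepest_descent((0, 0), 6)):
        print(k, [str(v) for v in x], f(x))
```

Starting from $(0,0)$, the first iterates are $(10/17,\,20/17)$ and $(25/17,\,25/34)$. Write the error as $\mathbf{e}_k=\mathbf{x}_k-\mathbf{x}^*$. Then $\mathbf{e}_2=\tfrac{9}{34}\,\mathbf{e}_0$. The exact step size does not change when the error is rescaled, so the pattern repeats: $\mathbf{e}_{2k}=(9/34)^k\,\mathbf{e}_0\neq\mathbf{0}$ for every $k$. The iterates zigzag toward $(2,1)$ without ever reaching it. By contrast, a start such as $(0,1)$ reaches $(2,1)$ in a single step, because its error $(-2,0)$ is an eigenvector of $Q$.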

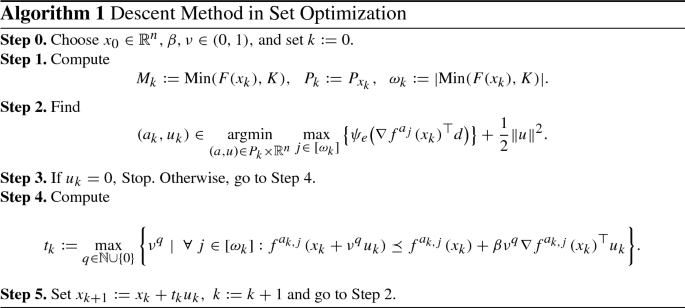

Mathematics, Free Full-Text

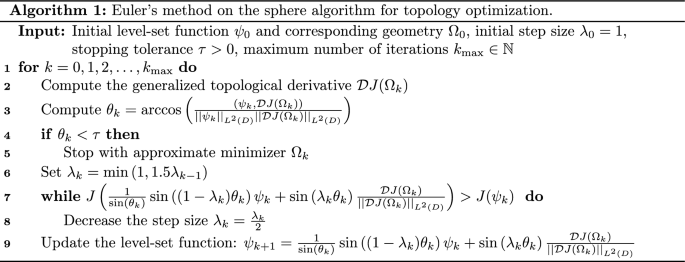

Quasi-Newton methods for topology optimization using a level-set method

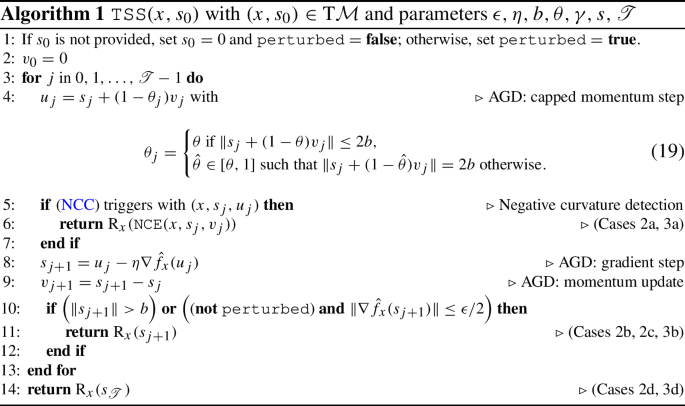

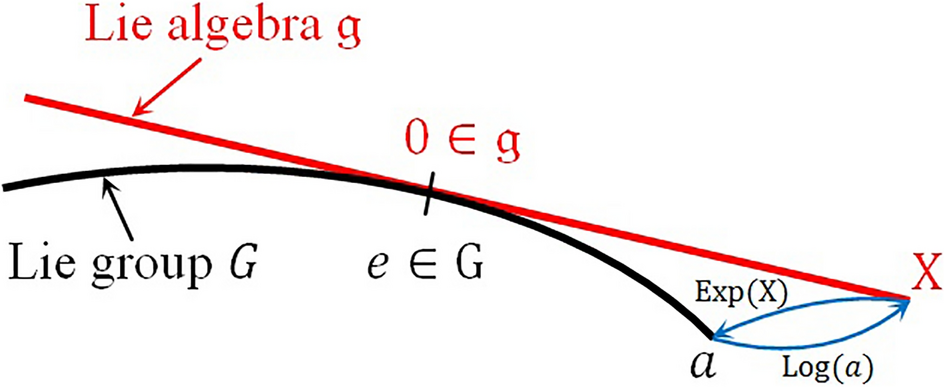

An Accelerated First-Order Method for Non-convex Optimization on Manifolds

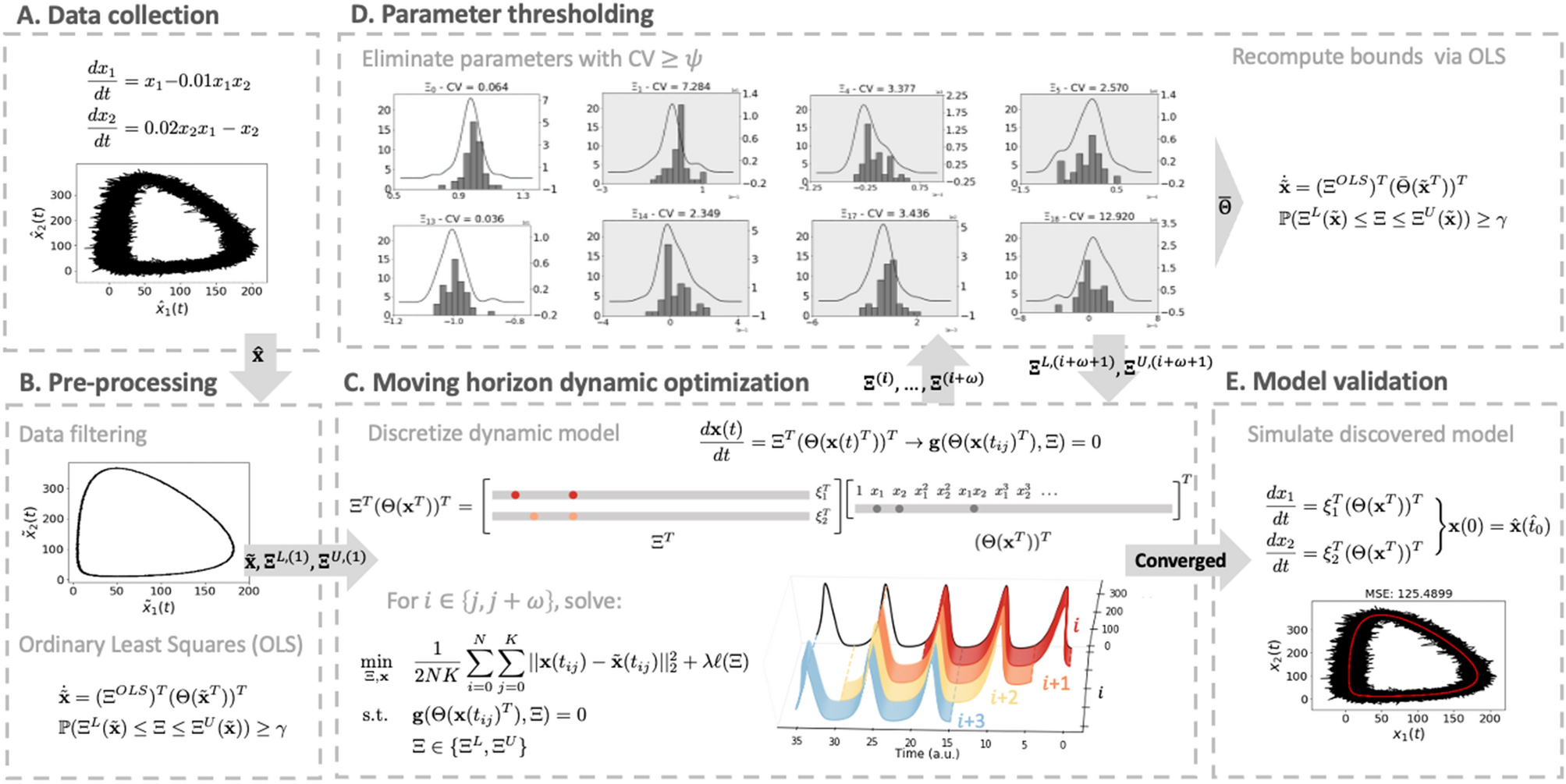

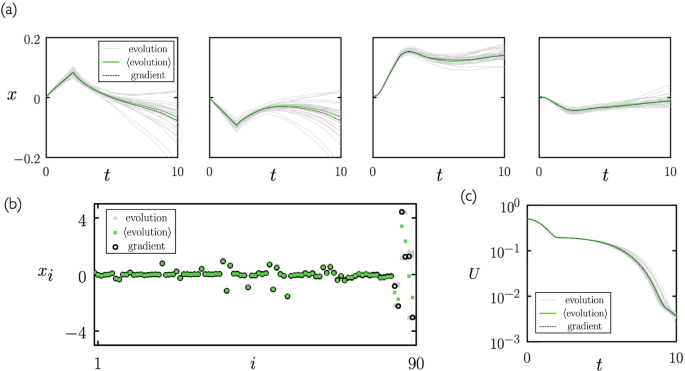

Data-driven discovery of the governing equations of dynamical systems via moving horizon optimization

gradient descent - How to Initialize Values for Optimization Algorithms? - Cross Validated

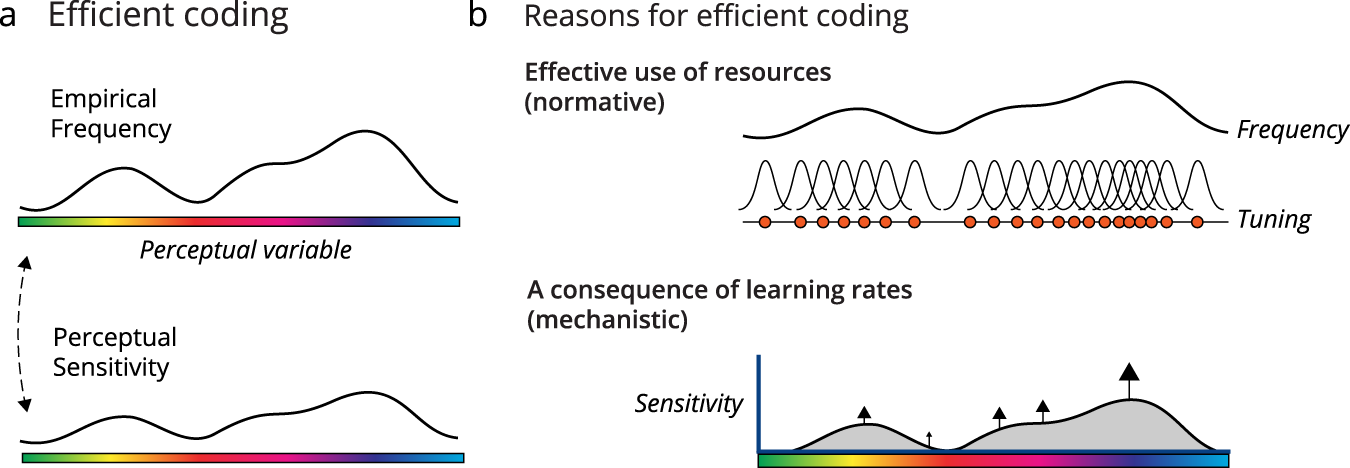

Efficient neural codes naturally emerge through gradient descent learning

Steepest Descent Method - an overview

Illustration of the TOBS with geometry trimming procedure (TOBS-GT)

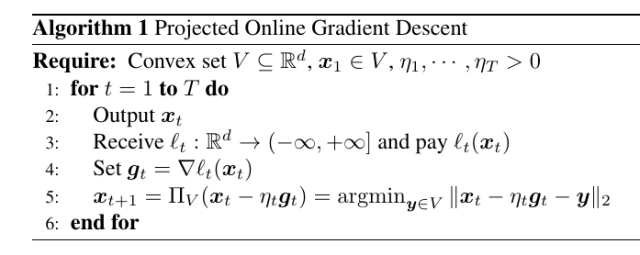

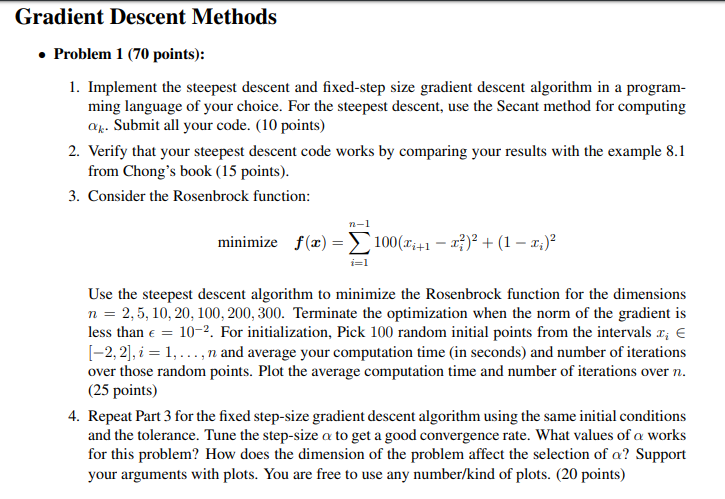

Gradient Descent Methods. Problem 1 (70 points): 1.

Fast gradient algorithm for complex ICA and its application to the MIMO systems

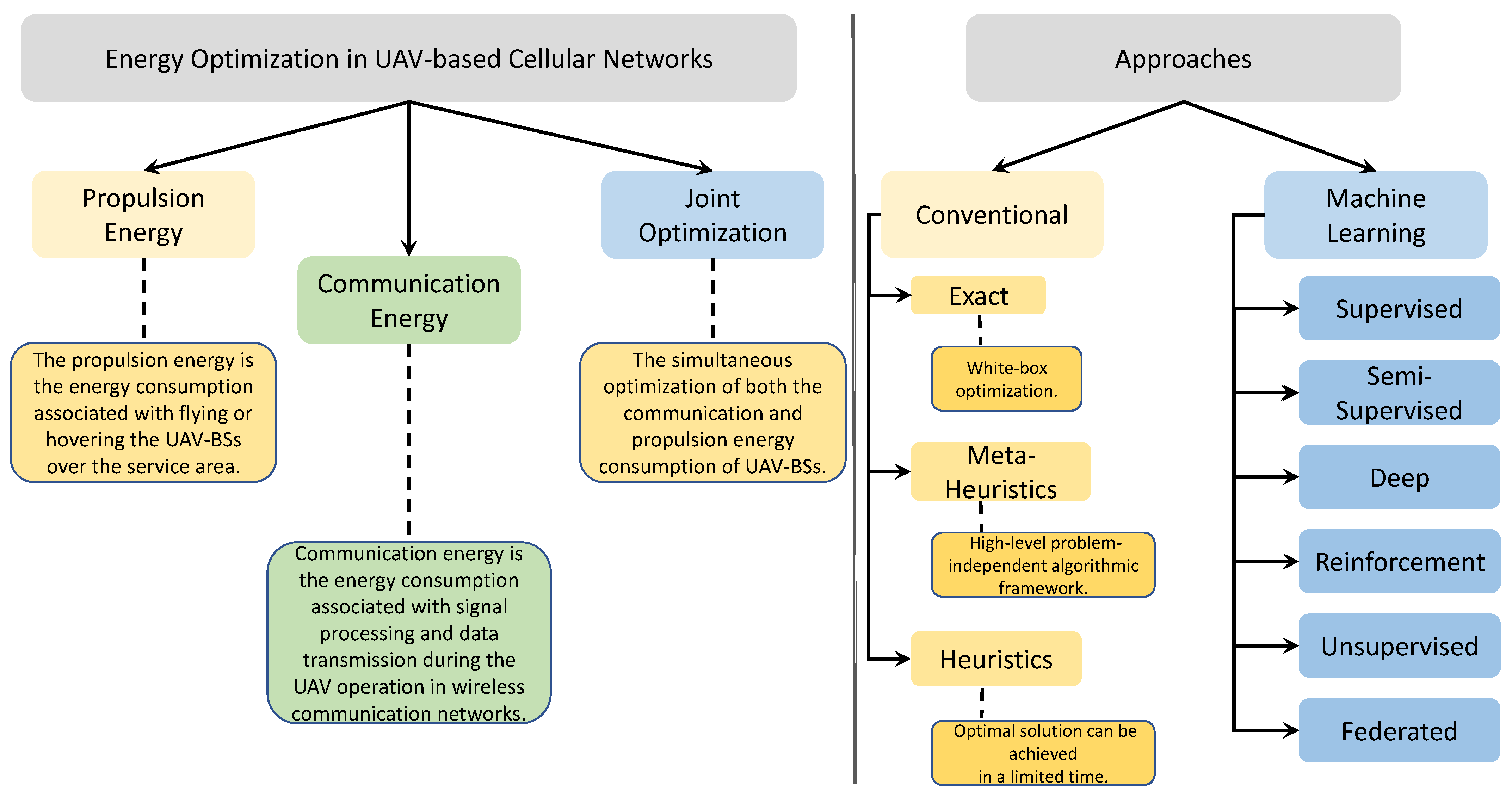

Drones, Free Full-Text

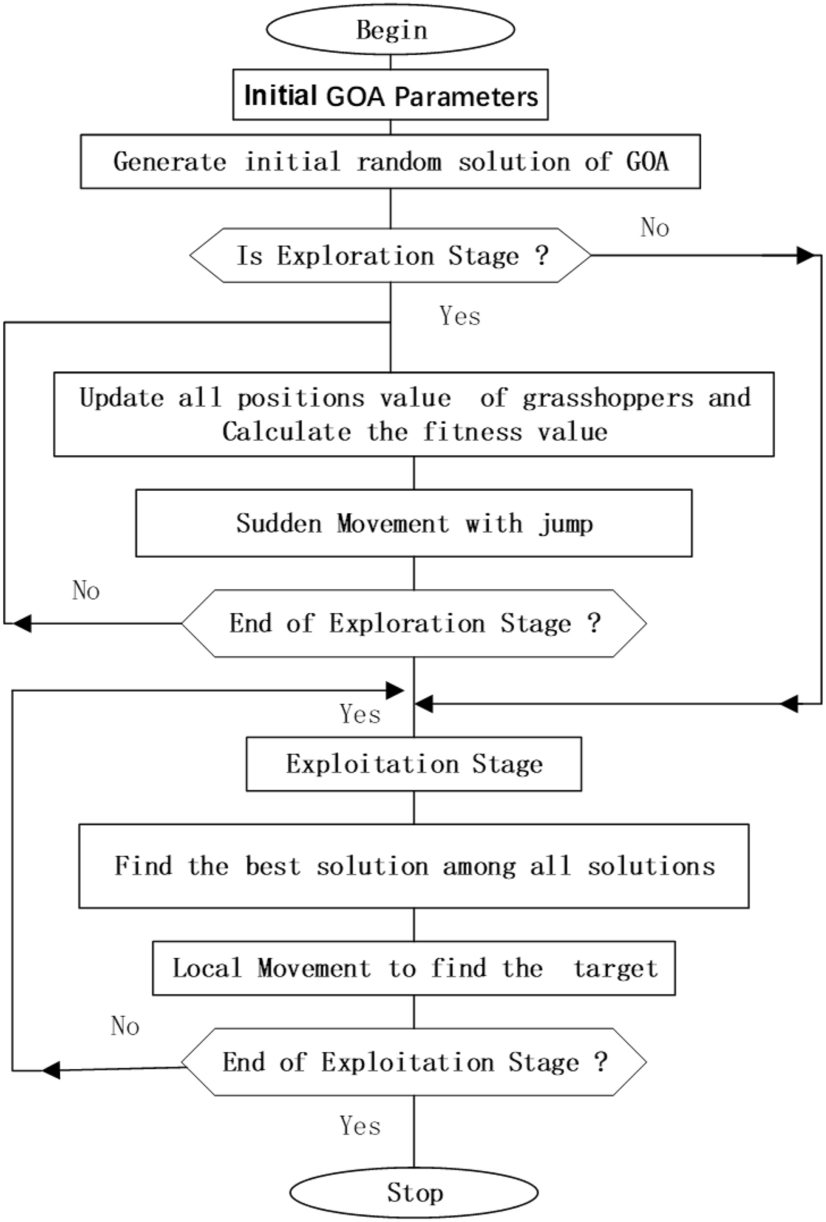

Enhancing grasshopper optimization algorithm (GOA) with levy flight for engineering applications

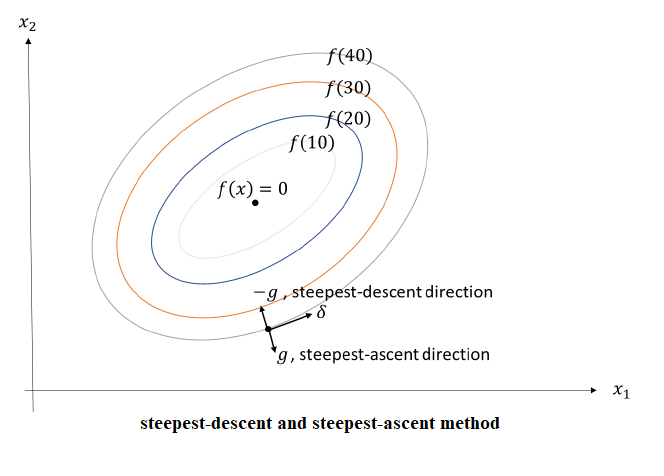

Steepest Descent Methods - NM EDUCATION

Correspondence between neuroevolution and gradient descent

Nonlinear programming - ppt download
