Visualizing the gradient descent method

Description

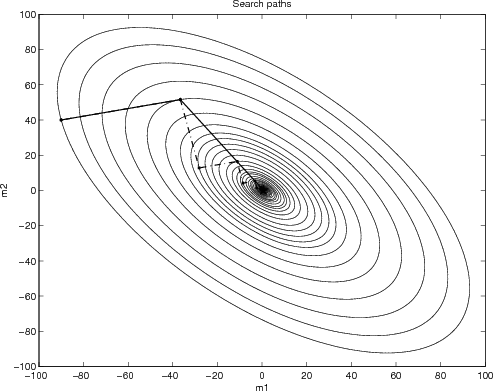

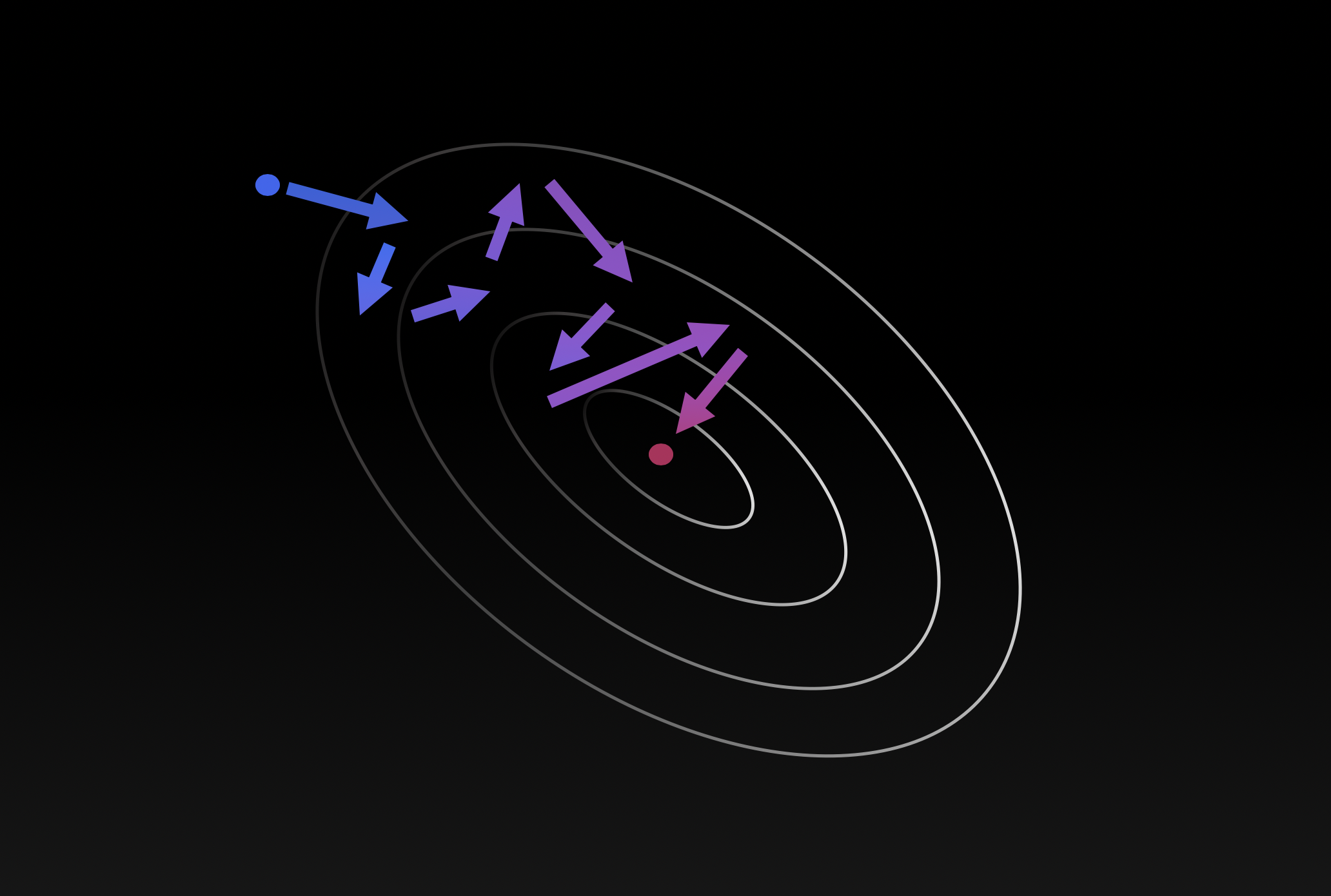

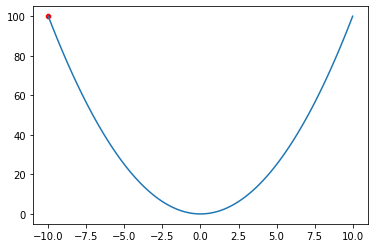

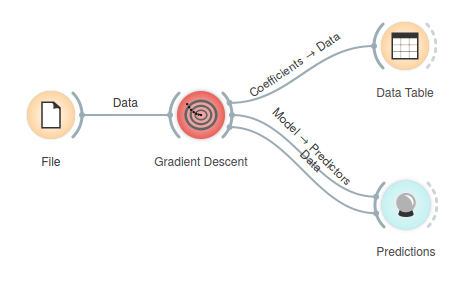

In the gradient descent method of optimization, a hypothesis function, $h_\boldsymbol{\theta}(x)$, is fitted to a data set, $(x^{(i)}, y^{(i)})$ ($i=1,2,\cdots,m$), by minimizing an associated cost function, $J(\boldsymbol{\theta})$, with respect to the parameters $\boldsymbol\theta = (\theta_0, \theta_1, \cdots)$. The cost function measures how closely the hypothesis fits the data for a given choice of $\boldsymbol\theta$. Starting from an initial guess, the parameters are repeatedly moved a small step against the gradient of $J$, so that, for a small enough step size, the cost decreases toward a (possibly local) minimum.
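The paragraph leaves the hypothesis and the cost unspecified. As a minimal sketch, assume a straight-line hypothesis $h_\boldsymbol{\theta}(x) = \theta_0 + \theta_1 x$ and the squared-error cost $J(\boldsymbol\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\boldsymbol{\theta}(x^{(i)}) - y^{(i)}\right)^2$, updated with the usual rule $\theta_j := \theta_j - \alpha\,\partial J/\partial\theta_j$ for a learning rate $\alpha$. These choices, the function names, and the default $\alpha$ are illustrative assumptions, not taken from the original. The function records every iterate and its cost, which is the data one would plot (for example as a path drawn over a contour plot of $J$) to visualize the descent.

```python
from typing import List, Sequence, Tuple

Theta = Tuple[float, float]


def hypothesis(theta: Theta, x: float) -> float:
    """Assumed linear hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta[0] + theta[1] * x


def cost(theta: Theta, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Squared-error cost J(theta) = 1/(2m) * sum_i (h(x_i) - y_i)^2."""
    m = len(xs)
    return sum((hypothesis(theta, x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient(theta: Theta, xs: Sequence[float], ys: Sequence[float]) -> Theta:
    """Partial derivatives (dJ/dtheta0, dJ/dtheta1) of the cost above."""
    m = len(xs)
    errors = [hypothesis(theta, x) - y for x, y in zip(xs, ys)]
    d0 = sum(errors) / m
    d1 = sum(e * x for e, x in zip(errors, xs)) / m
    return d0, d1


def gradient_descent(
    xs: Sequence[float],
    ys: Sequence[float],
    theta0: Theta = (0.0, 0.0),
    alpha: float = 0.1,
    n_iters: int = 1000,
) -> Tuple[Theta, List[Theta], List[float]]:
    """Batch gradient descent; returns final theta, the theta path, and J per step."""
    theta = theta0
    path = [theta]
    costs = [cost(theta, xs, ys)]
    for _ in range(n_iters):
        g0, g1 = gradient(theta, xs, ys)
        # Simultaneous update: both parameters use the gradient at the old theta.
        theta = (theta[0] - alpha * g0, theta[1] - alpha * g1)
        path.append(theta)
        costs.append(cost(theta, xs, ys))
    return theta, path, costs


if __name__ == "__main__":
    # Toy data lying exactly on y = 1 + 2x, so theta should approach (1.0, 2.0).
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]
    theta, path, costs = gradient_descent(xs, ys, alpha=0.1, n_iters=1000)
    print("final theta:", theta)
    print("initial cost:", costs[0], "final cost:", costs[-1])
```

The `path` list is what a visualization would trace over the $(\theta_0, \theta_1)$ plane. For this toy data set the iterates diverge once $\alpha$ exceeds about 0.3, which is the usual way to see the effect of a learning rate that is too large.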

Gradient Descent Visualization - Martin Kondor

Gradient Descent vs Adagrad vs Momentum in TensorFlow

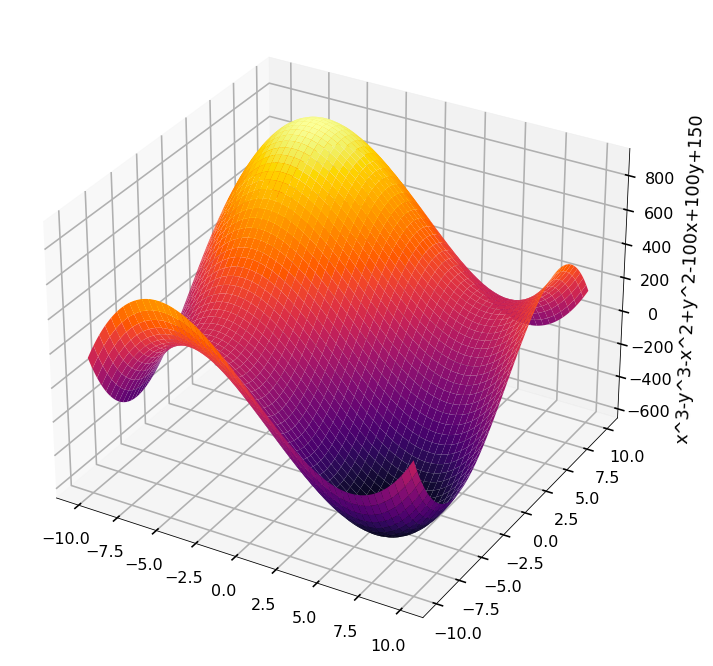

How to visualize Gradient Descent using Contour plot in Python

Gradient Descent in Machine Learning, by Francesco Franco

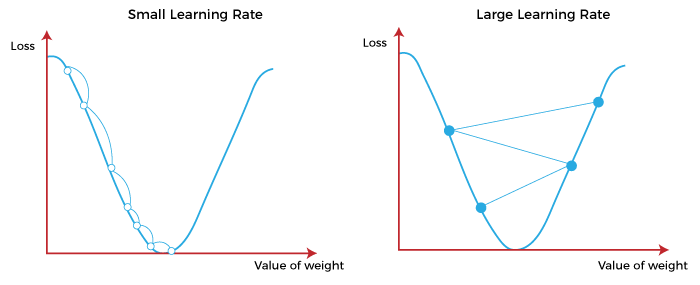

Gradient Descent and its Types - Analytics Vidhya

Gradient descent visualization - plateau

ZO-AdaMM: Derivative-free optimization for black-box problems - MIT-IBM Watson AI Lab

Gradient Descent in Machine Learning - Javatpoint

Understanding Gradient Descent. Introduction, by Necati Demir

Orange Data Mining - Visualizing Gradient Descent
