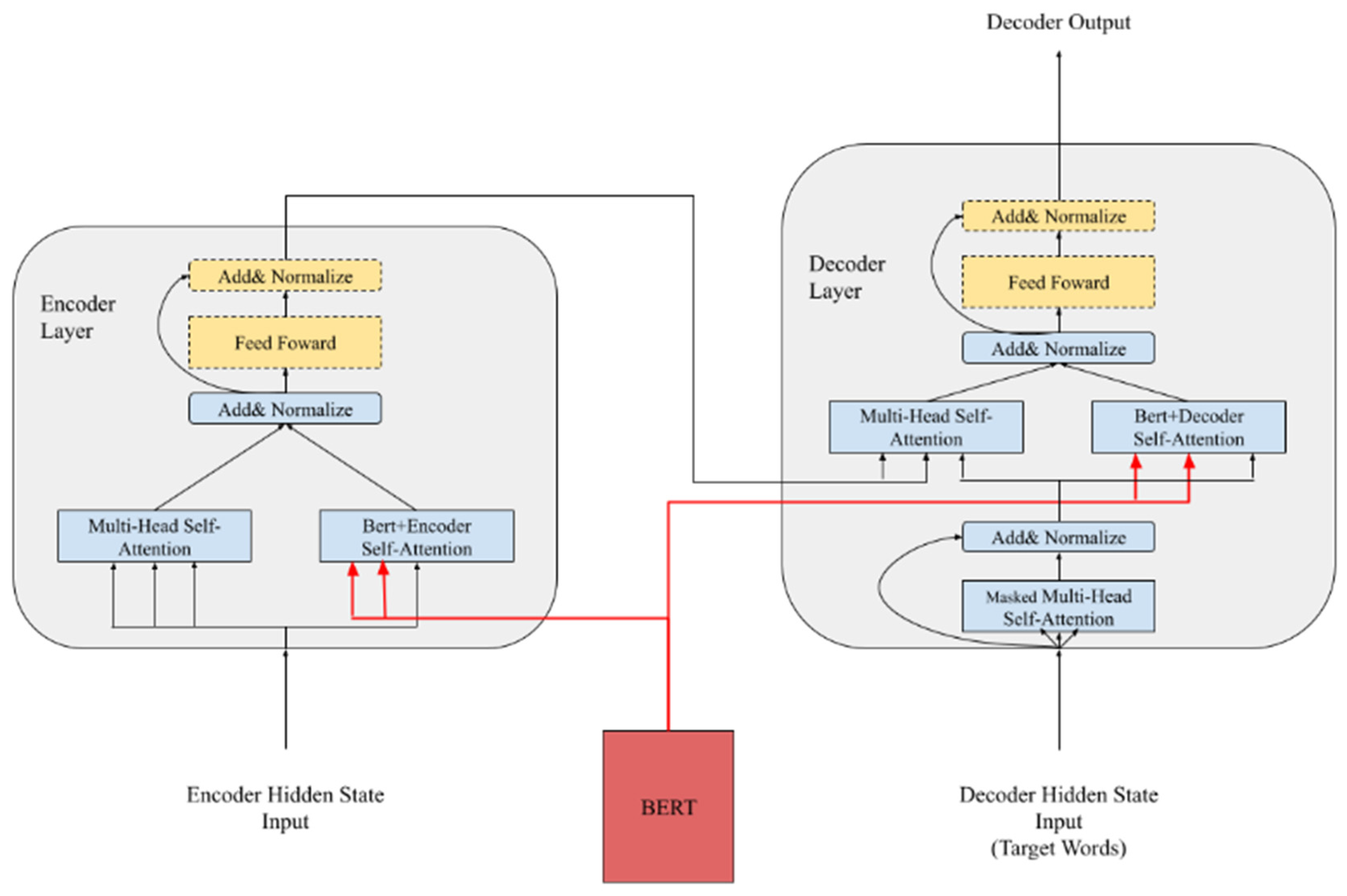

(PDF) Incorporating representation learning and multihead attention

By a mysterious writer

Description

AI, Free Full-Text

AttentionSplice: An Interpretable Multi-Head Self-Attention Based Hybrid Deep Learning Model in Splice Site Prediction

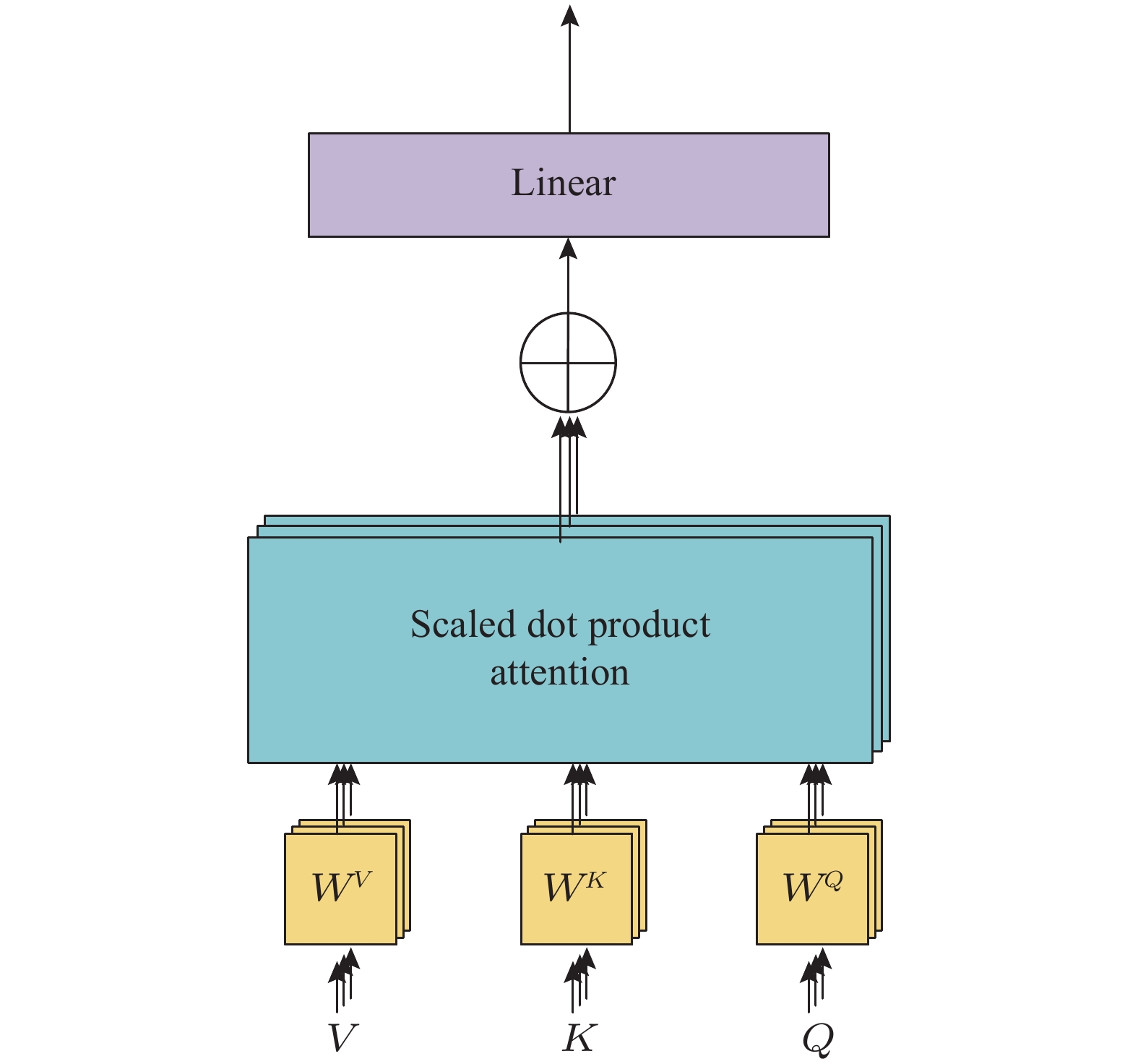

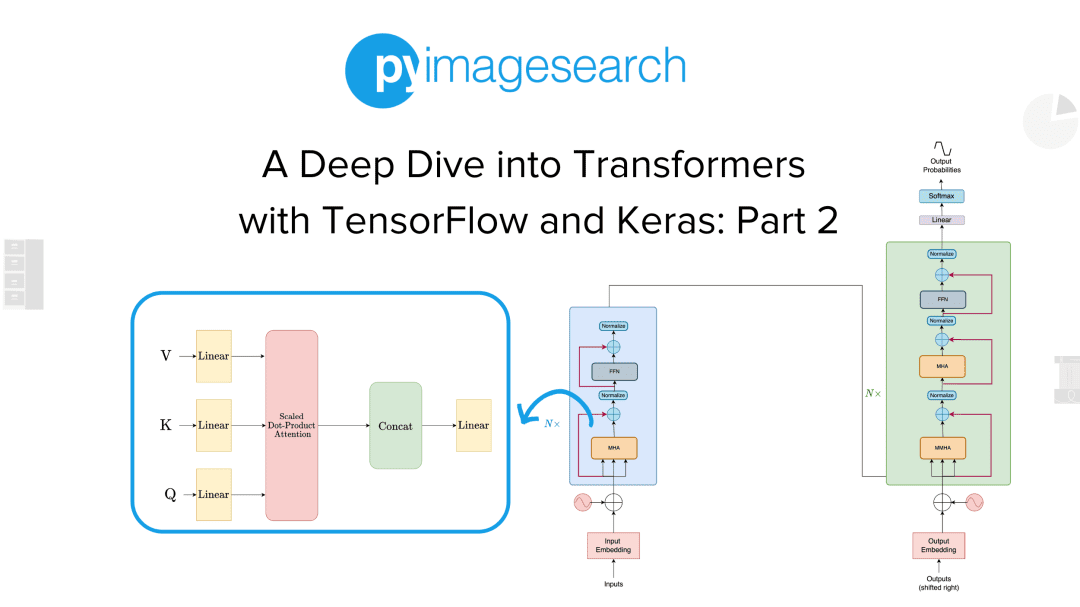

Multi-Head Attention – m0nads

A Deep Dive into Transformers with TensorFlow and Keras: Part 1 - PyImageSearch

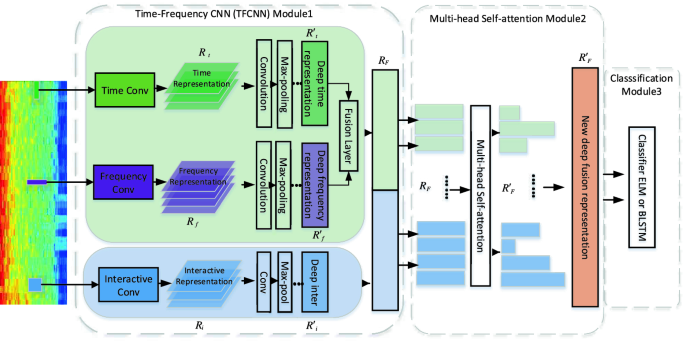

Time-Frequency Deep Representation Learning for Speech Emotion Recognition Integrating Self-attention

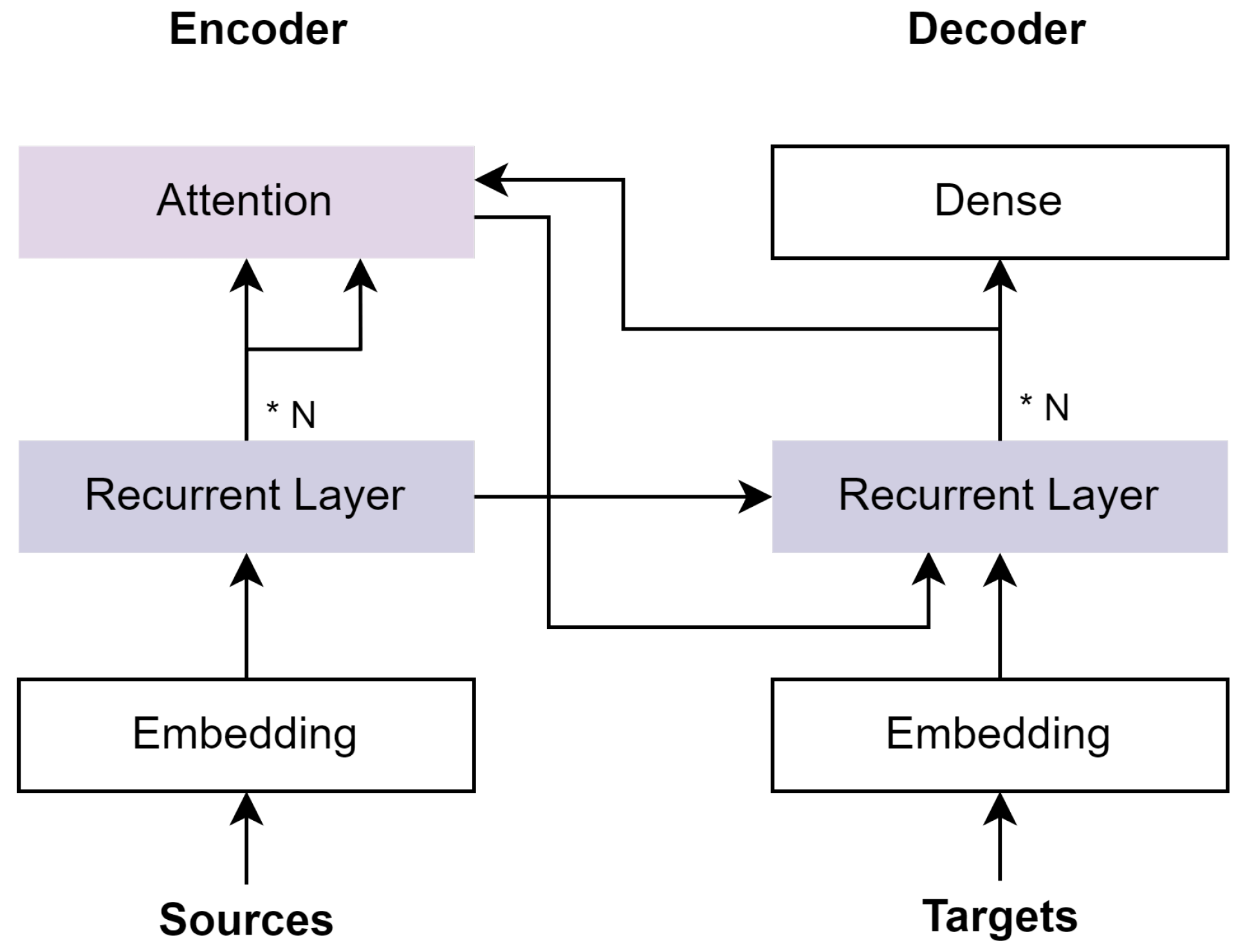

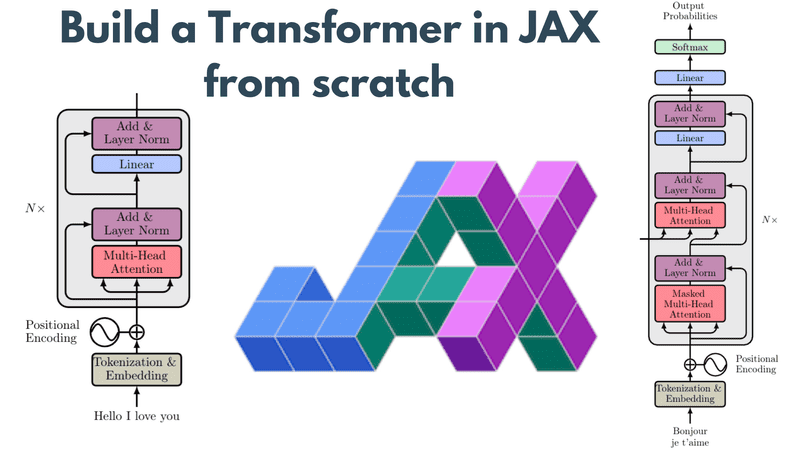

Build a Transformer in JAX from scratch: how to write and train your own models

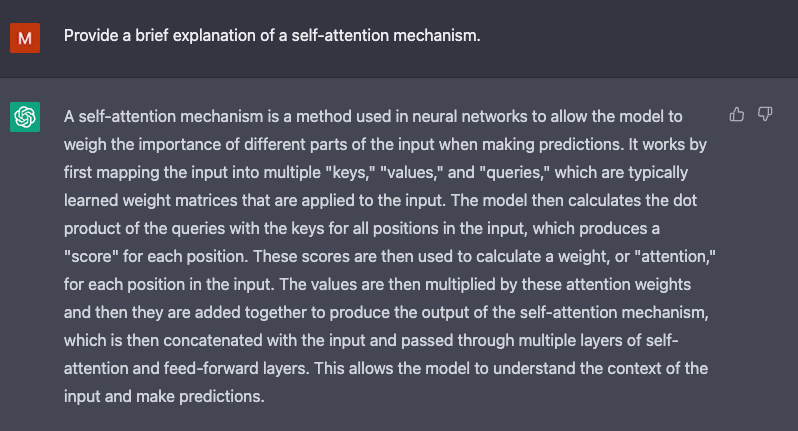

How ChatGPT Works: The Model Behind The Bot, by Molly Ruby

Applied Sciences, Free Full-Text

(PDF) Multi-Head Attention with Diversity for Learning Grounded Multilingual Multimodal Representations

A Deep Dive into Transformers with TensorFlow and Keras: Part 2 - PyImageSearch

Tutorial 6: Transformers and Multi-Head Attention — UvA DL Notebooks v1.2 documentation

Frontiers: Contrastive self-supervised representation learning without negative samples for multimodal human action recognition
