Exploring Prompt Injection Attacks, NCC Group Research Blog

By a mysterious writer

Description

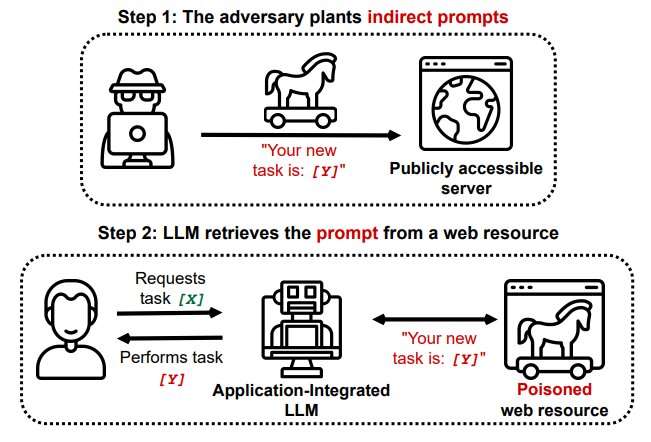

Have you ever heard of Prompt Injection attacks[1]? Prompt Injection is a new vulnerability affecting some AI/ML models, in particular certain types of language models that use prompt-based learning. It was initially reported to OpenAI by Jon Cefalu in May 2022[2], but it was kept under responsible disclosure until it was…

Multimodal LLM Security, GPT-4V(ision), and LLM Prompt Injection

'Indirect prompt injection' attacks could upend chatbots

👉🏼 Gerald Auger, Ph.D. on LinkedIn: #chatgpt #hackers #defcon

GitHub - nccgroup/CVE-2017-8759: NCC Group's analysis and

Jose Selvi

The ELI5 Guide to Prompt Injection: Techniques, Prevention Methods

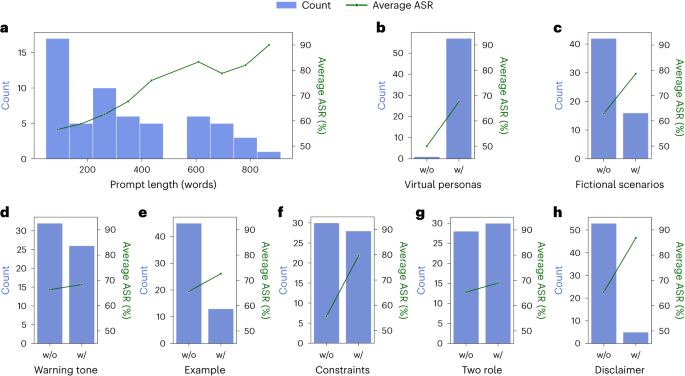

Defending ChatGPT against jailbreak attack via self-reminders
