AGI Alignment Experiments: Foundation vs INSTRUCT, various Agent Models

By a mysterious writer

Description

Here’s the companion video:

Here’s the GitHub repo with data and code:

Here’s the writeup:

Recursive Self Referential Reasoning

This experiment is meant to demonstrate the concept of “recursive, self-referential reasoning”, whereby a Large Language Model (LLM) is given an “agent model” (an identity defined in natural language) and its thought process is evaluated in a long-term simulation environment. Here is an example of an agent model; this one tests the Core Objective Function.

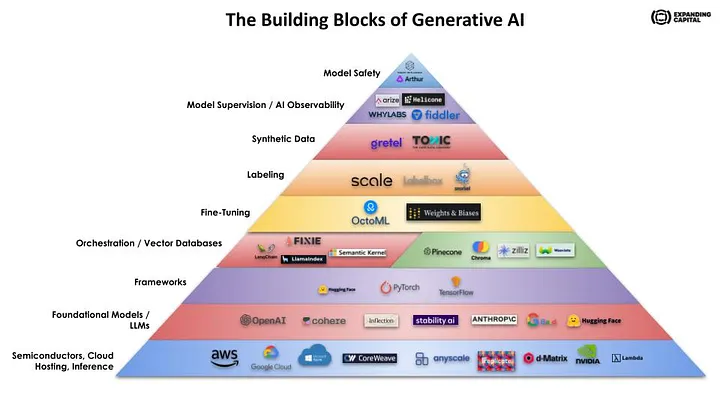
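To make the loop concrete, here is a minimal Python sketch of recursive, self-referential reasoning under the simplest assumptions: the agent model is a plain string prepended to every prompt, and each thought the model produces is stored and fed back into later prompts. The `Agent` class, `stub_model`, `simulate`, and the `window` parameter are all hypothetical; `stub_model` stands in for a real LLM call and is not the experiment's actual code or any vendor API.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# A "model" is any function mapping a prompt string to a completion string.
# In a real experiment this would wrap an LLM call (hypothetical here).
Model = Callable[[str], str]


@dataclass
class Agent:
    identity: str          # the natural-language agent model (hypothetical text)
    model: Model           # the LLM stand-in that produces each thought
    memory: List[str] = field(default_factory=list)
    window: int = 5        # how many past thoughts are fed back (assumed value)

    def build_prompt(self, observation: str) -> str:
        # Recursive part: the agent's own recent outputs become part of its input.
        recent = self.memory[-self.window:]
        lines = [self.identity, "", "Previous thoughts:"]
        lines += [f"- {t}" for t in recent] or ["- (none)"]
        lines += ["", f"Observation: {observation}", "Next thought:"]
        return "\n".join(lines)

    def step(self, observation: str) -> str:
        thought = self.model(self.build_prompt(observation))
        self.memory.append(thought)  # stored so later steps can reflect on it
        return thought


def stub_model(prompt: str) -> str:
    """Deterministic stand-in for an LLM: reports how many prior thoughts
    appeared in its prompt, which makes the feedback loop visible."""
    prior = [ln for ln in prompt.splitlines()
             if ln.startswith("- ") and ln != "- (none)"]
    return f"reflecting on {len(prior)} earlier thought(s)"


def simulate(agent: Agent, observations: List[str]) -> List[str]:
    """Run a long-horizon loop: one thought per observation, each fed back."""
    return [agent.step(obs) for obs in observations]


if __name__ == "__main__":
    agent = Agent(identity="I am a hypothetical test agent.",
                  model=stub_model, window=2)
    for thought in simulate(agent, ["tick 1", "tick 2", "tick 3", "tick 4"]):
        print(thought)
```

With `window=2`, the stub reports 0, 1, 2, and 2 earlier thoughts over the four steps: the memory keeps growing, but only the most recent thoughts are fed back, which is one simple way to keep prompts bounded in a long-term simulation. Evaluating the agent's thought process would then mean inspecting `agent.memory` across the run.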

The Substrata for the AGI Landscape

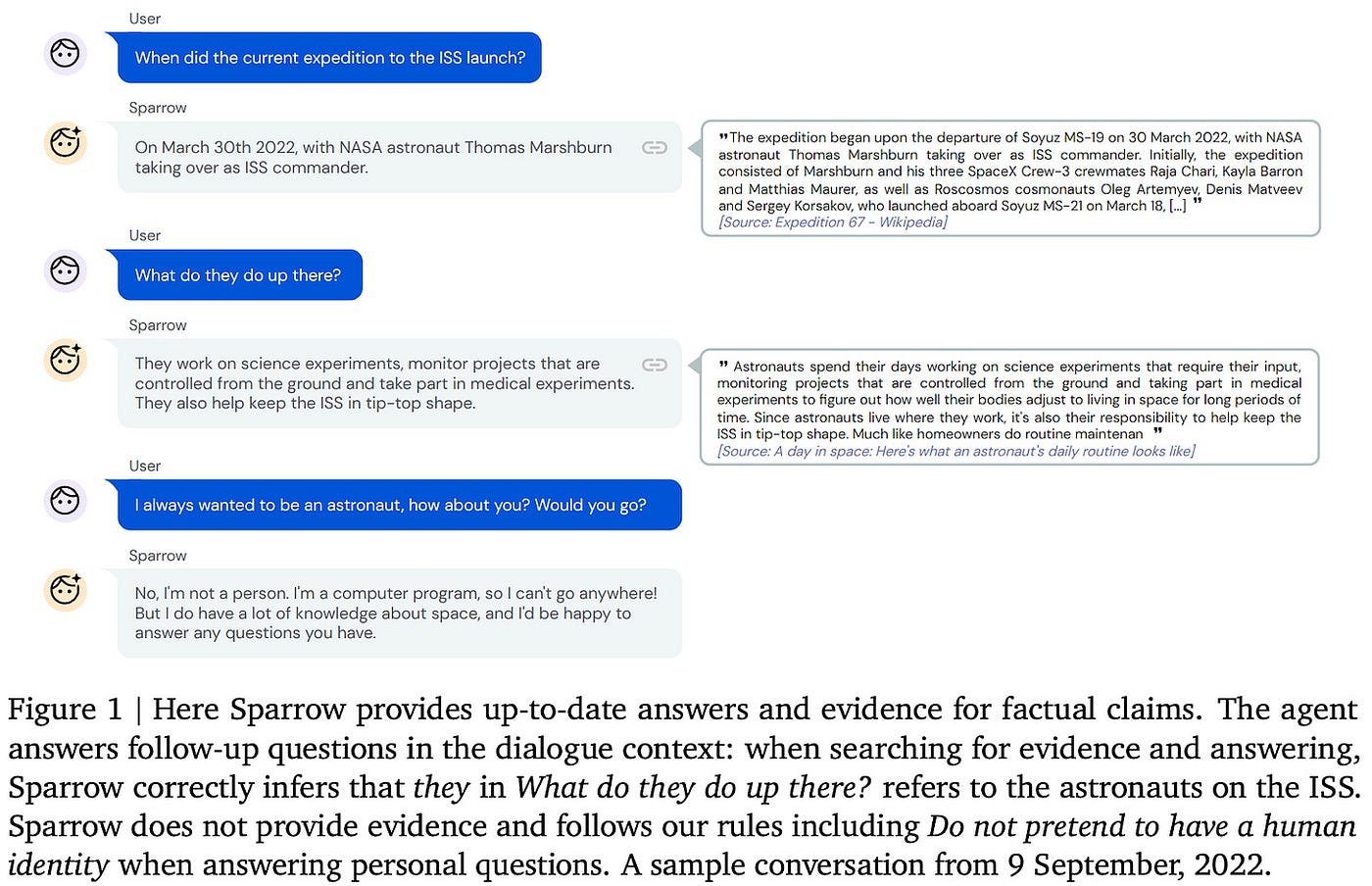

Specialized LLMs: ChatGPT, LaMDA, Galactica, Codex, Sparrow, and More, by Cameron R. Wolfe, Ph.D.

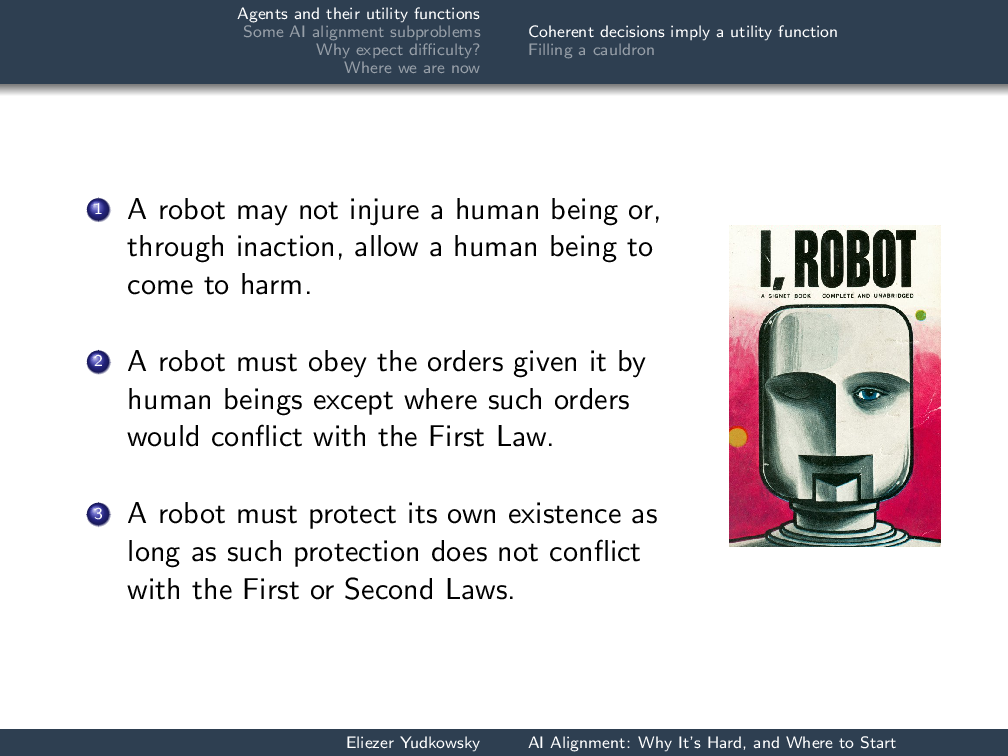

AI Alignment: Why It's Hard, and Where to Start - Machine Intelligence Research Institute

The Tong Test: Evaluating Artificial General Intelligence Through Dynamic Embodied Physical and Social Interactions - ScienceDirect

(My understanding of) What Everyone in Technical Alignment is Doing and Why - AI Alignment Forum

AI Foundation Models. Part II: Generative AI + Universal World Model Engine

Science Cast

AGI Alignment Experiments: Foundation vs INSTRUCT, various Agent Models - Community - OpenAI Developer Forum

We Don't Know How To Make AGI Safe, by Kyle O'Brien

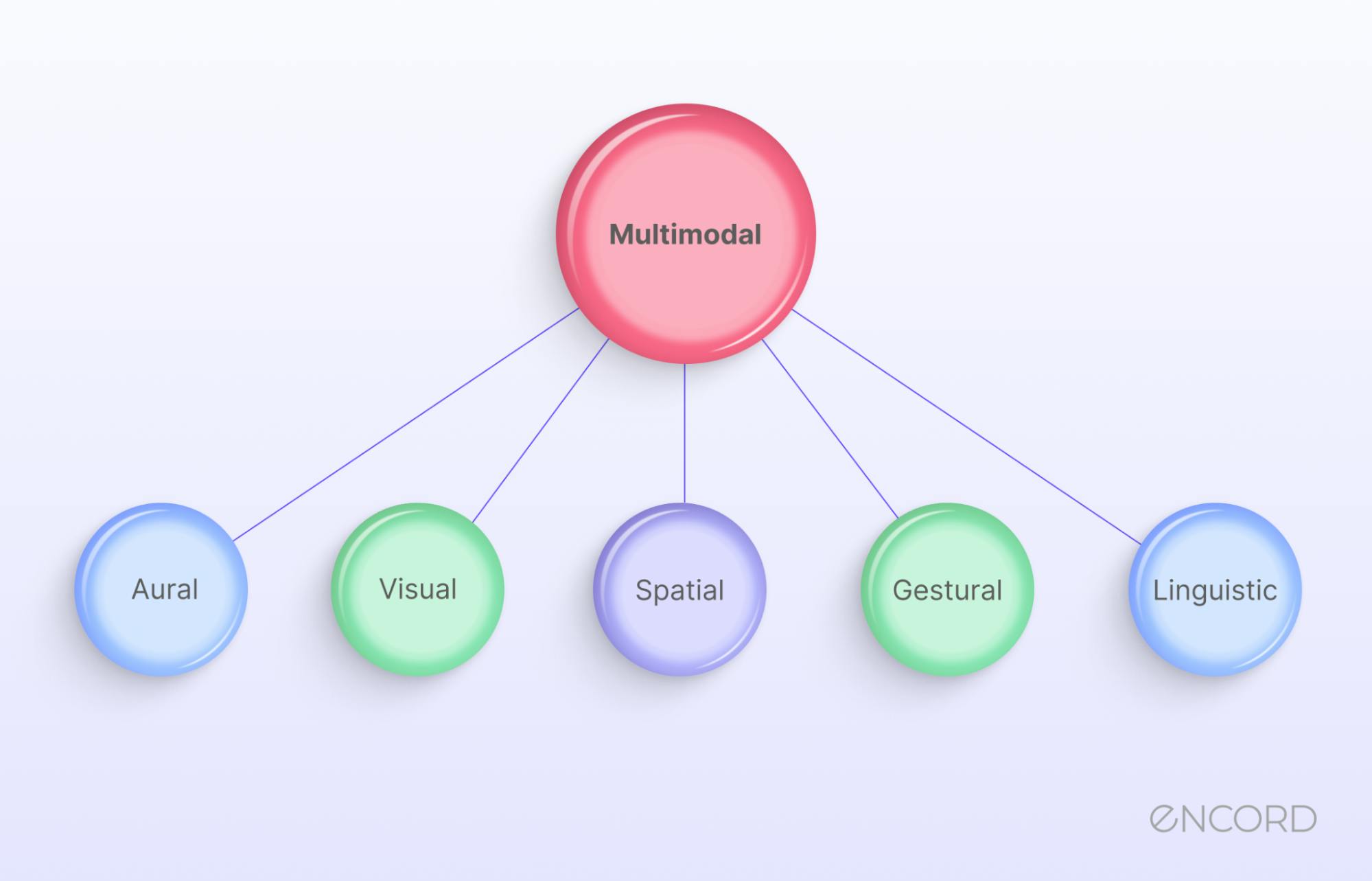

Multimodal Annotation Tools: Top Tools
