Six Dimensions of Operational Adequacy in AGI Projects — LessWrong

Description

Editor's note: The following is a lightly edited copy of a document written by Eliezer Yudkowsky in November 2017. Since this is a snapshot of Eliez…

25 of Eliezer Yudkowsky Podcasts Interviews

OpenAI, DeepMind, Anthropic, etc. should shut down. — LessWrong

OpenAI, DeepMind, Anthropic, etc. should shut down. — EA Forum

2022 (and All Time) Posts by Pingback Count — LessWrong

Ideas for AI labs: Reading list — LessWrong

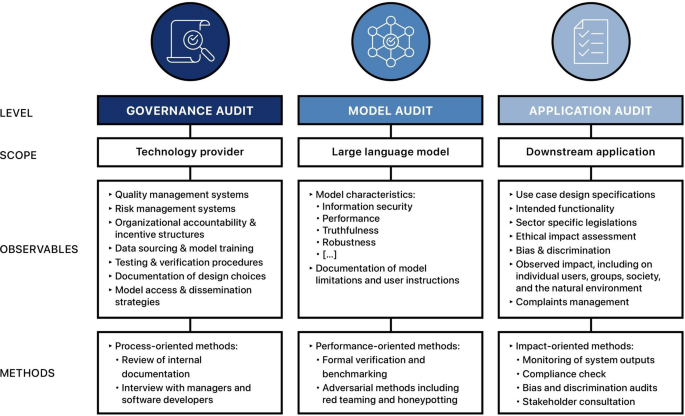

Auditing large language models: a three-layered approach

AGI Ruin: A List of Lethalities — AI Alignment Forum

Challenges to Christiano's capability amplification proposal

Security Mindset - LessWrong

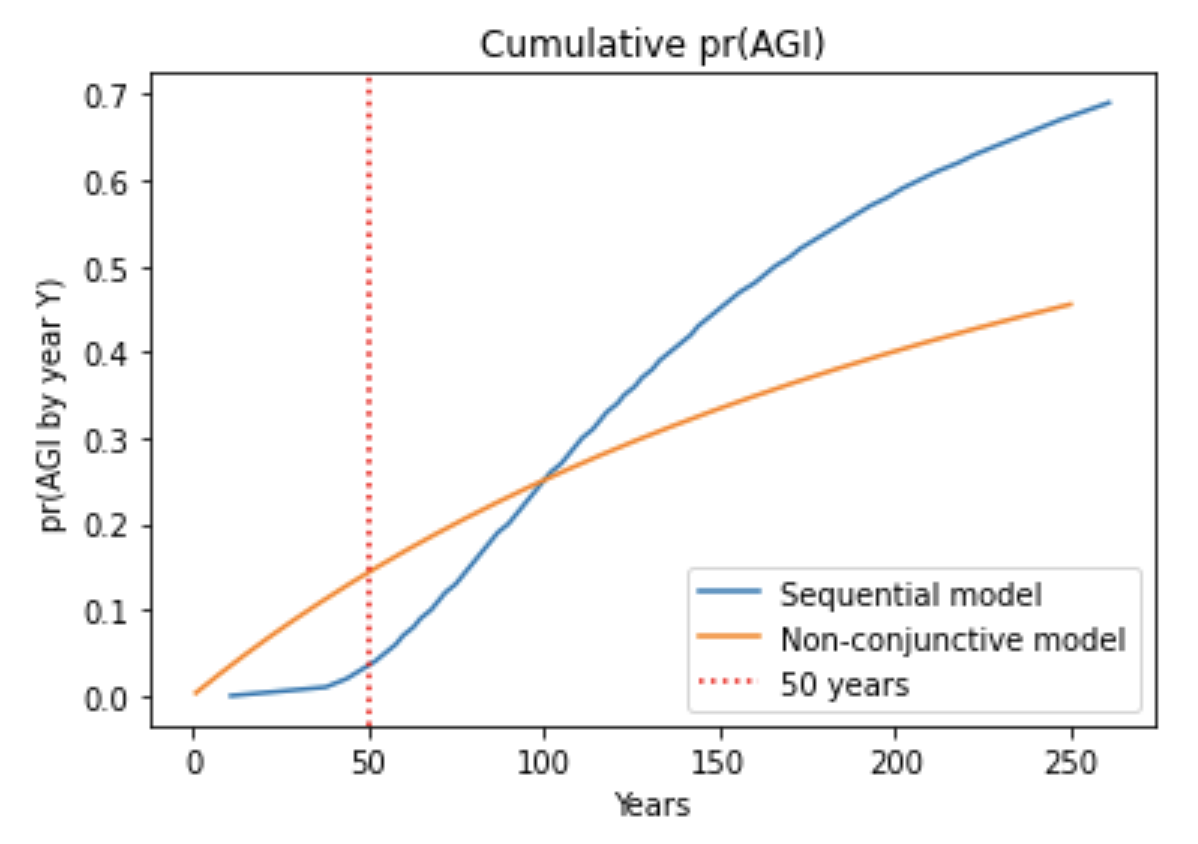

Semi-informative priors over AI timelines

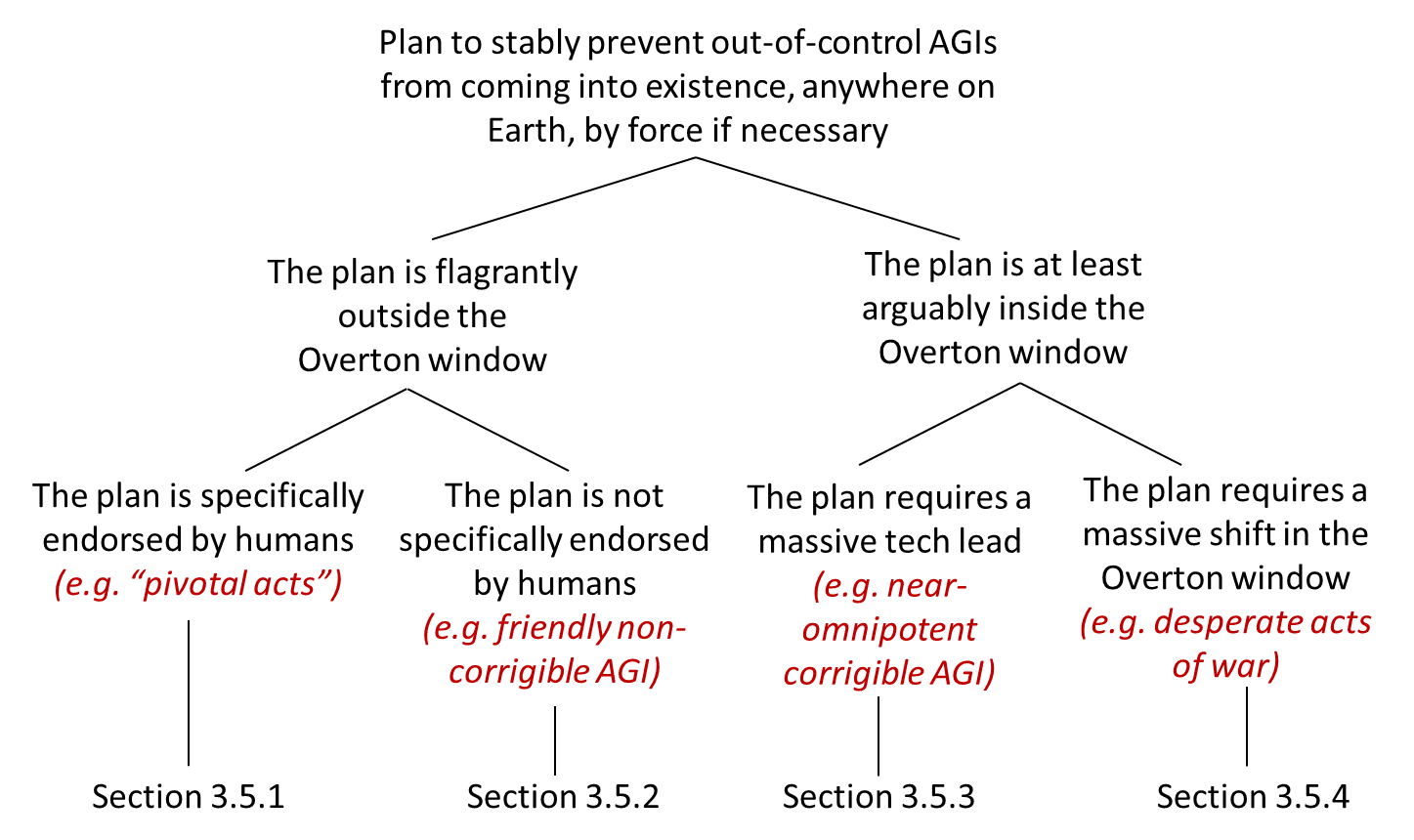

What does it take to defend the world against out-of-control AGIs

MIRI announces new Death With Dignity strategy - LessWrong 2.0

Without specific countermeasures, the easiest path to
