Efficient and Accurate Candidate Generation for Grasp Pose

Description

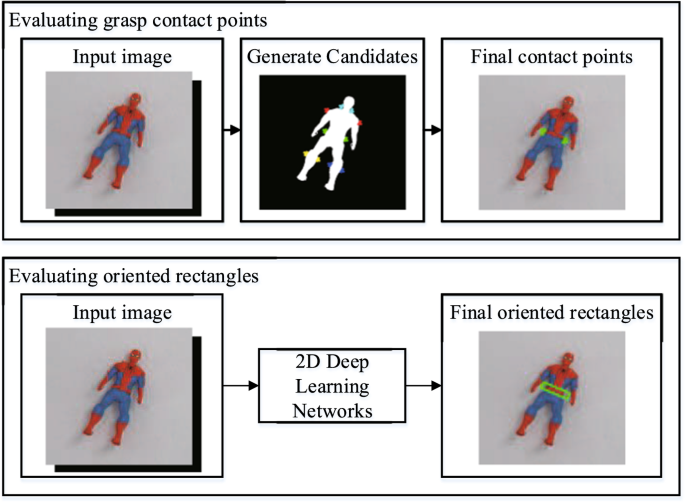

Recently, a number of grasp detection methods have been proposed that localize robotic grasp configurations directly from sensor data, without estimating object pose. The underlying idea is to treat grasp perception analogously to object detection in computer vision. These methods take as input a noisy and partially occluded RGB-D image or point cloud and produce as output pose estimates of viable grasps, without assuming a known CAD model of the object. Although these methods generalize grasp knowledge to new objects well, they have not yet been demonstrated to be reliable enough for wide use. Many grasp detection methods achieve grasp success rates (grasp successes as a fraction of the total number of grasp attempts) between 75% and 95% for novel objects presented in isolation or in light clutter. Not only are these success rates too low for practical grasping applications, but the light-clutter scenarios that are evaluated often do not reflect the realities of real-world grasping. This paper proposes a number of innovations that together result in a significant improvement in grasp detection performance. The specific improvement in performance due to each of our contributions is quantitatively measured either in simulation or on robotic hardware. Ultimately, we report a series of robotic experiments that average a 93% end-to-end grasp success rate for novel objects presented in dense clutter.

Vision-based robotic grasping from object localization, object

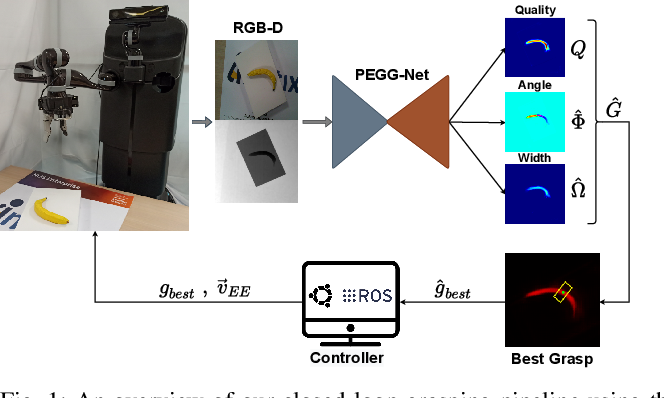

PEGG-Net: Background Agnostic Pixel-Wise Efficient Grasp

Deep learning for detecting robotic grasps - Ian Lenz, Honglak Lee

Grasp detection via visual rotation object detection and point

During training, the encoder maps each grasp to a point z in a

Biomimetics, Free Full-Text

GitHub - kidpaul94/My-Robotic-Grasping: Collection of Papers

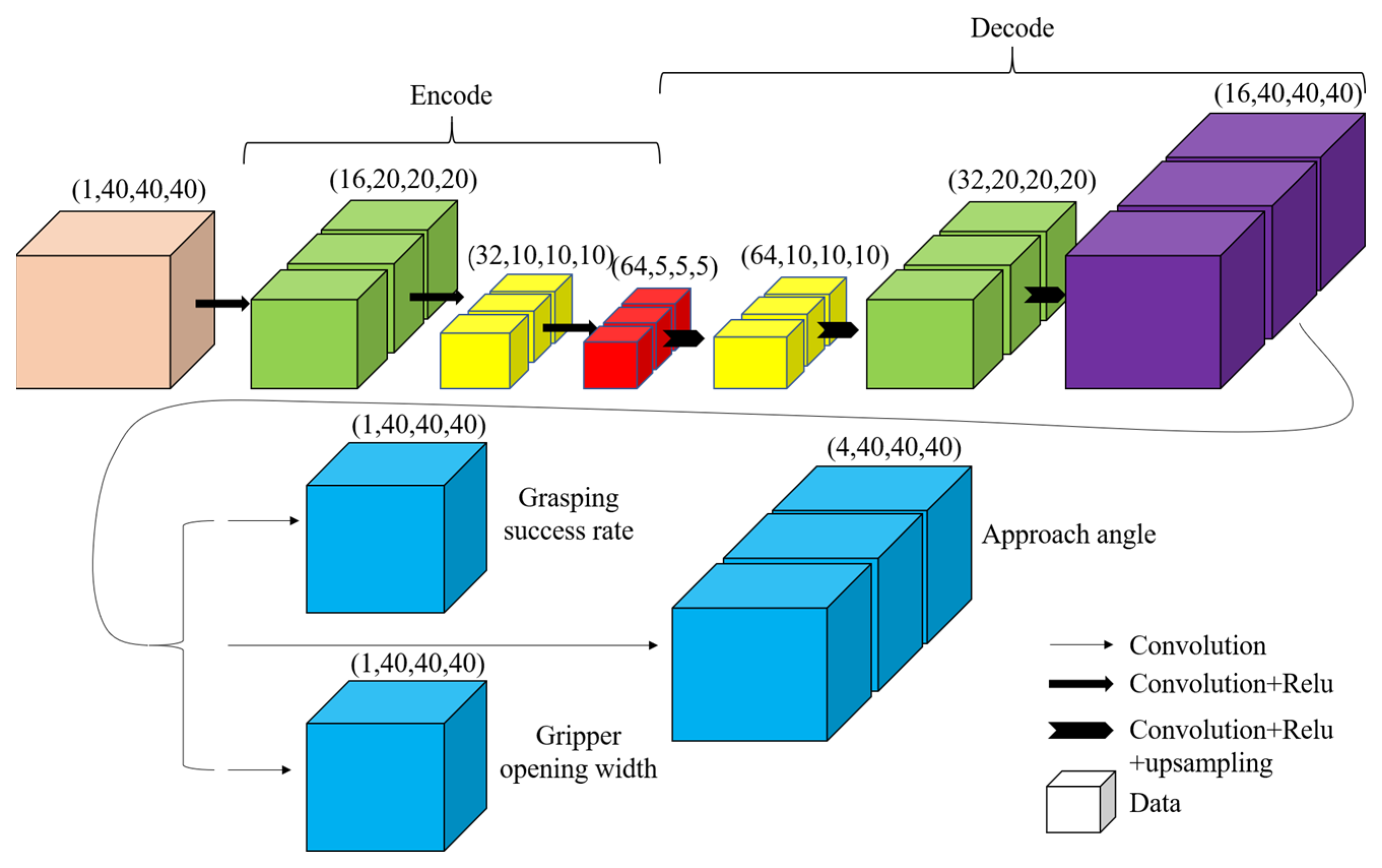

A grasps-generation-and-selection convolutional neural network for

Sensors, Free Full-Text

Overview of robotic grasp detection from 2D to 3D - ScienceDirect

Machines, Free Full-Text

Examples for grasp generation on single primitives. A sphere

Dex-Net 2.0 pipeline for training dataset generation. (Left) The

The architecture of GraspCVAE. (a) In training, it takes both
